Robots.txt

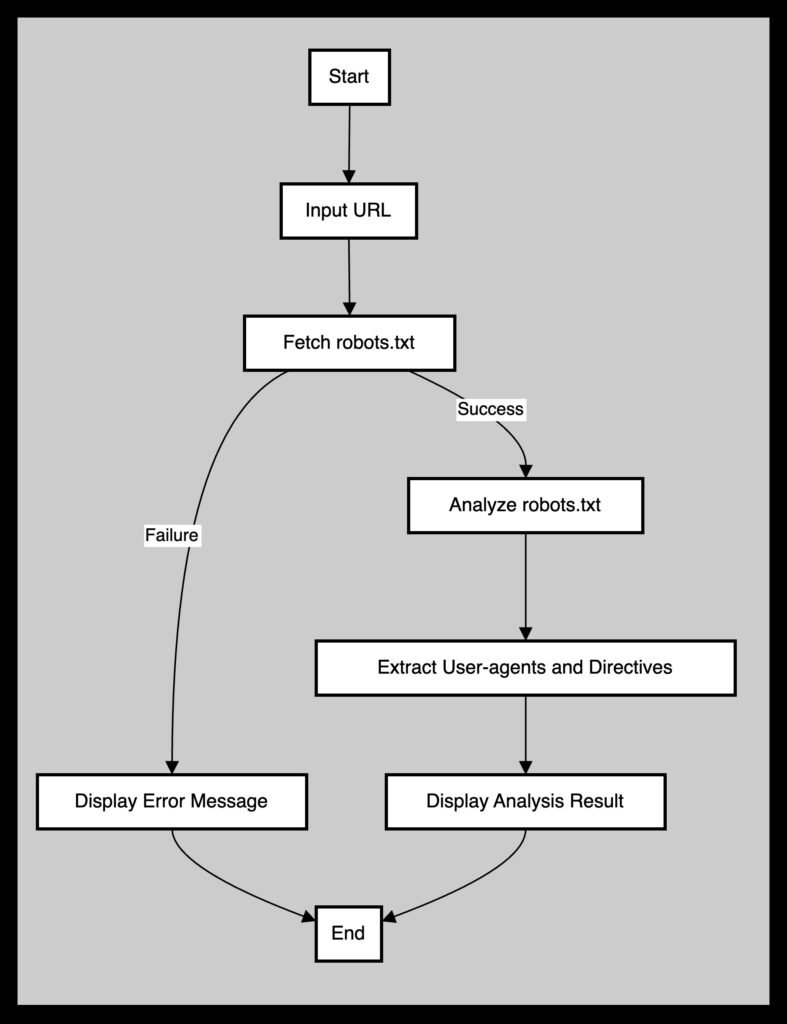

Explanation of the Program

The provided Python script analyzes the robots.txt file of a specified website. It fetches the file from the given URL, checks that the retrieval succeeded, and processes its content. The script groups the directives by user agent and reports which parts of the website each crawler is allowed or disallowed to crawl. This makes it easy to verify that important content is open to search engines while non-essential or private areas are blocked.

Key Components of the Script

- Fetching the robots.txt File:

The script appends `/robots.txt` to the base URL of the website to retrieve the file. It uses the `requests` library to perform an HTTP GET request. If the request is successful (HTTP status code 200), it returns the content of the robots.txt file. If the retrieval fails, it outputs an error message indicating the status code and reason.

- Analyzing the Content:

Once the robots.txt file is fetched, the script processes its content by splitting it into lines. It identifies user agents and their corresponding directives (e.g., `Allow` and `Disallow`). This categorization shows which parts of the website are accessible to search engines.

- Outputting Results:

After analyzing the content, the script prints the directives for each user agent. This provides a clear overview of the crawling permissions set in the robots.txt file.
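The original script is not reproduced on this page, so the following is a minimal sketch of the three steps above rather than the author's code. It assumes the third-party `requests` package is installed; the function names (`fetch_robots_txt`, `analyze_robots_txt`, `print_results`, `main`) and the file name `check_robots.py` are hypothetical. `requests` is imported inside the fetch function so the parsing step can be reused without network access.

```python
from __future__ import annotations

import sys
from collections import defaultdict


def fetch_robots_txt(base_url: str, timeout: float = 10.0) -> str | None:
    """Fetch <base_url>/robots.txt and return its text, or None on failure."""
    import requests  # third-party; imported here so parsing works without it

    url = base_url.rstrip("/") + "/robots.txt"
    try:
        response = requests.get(url, timeout=timeout)
    except requests.RequestException as exc:
        print(f"Failed to retrieve {url}: {exc}")
        return None
    if response.status_code == 200:
        return response.text
    print(f"Failed to retrieve {url}: {response.status_code} {response.reason}")
    return None


def analyze_robots_txt(content: str) -> dict[str, list[tuple[str, str]]]:
    """Group Allow/Disallow directives under the user agent(s) they apply to."""
    rules: dict[str, list[tuple[str, str]]] = defaultdict(list)
    current_agents: list[str] = []
    previous_was_agent = False
    for raw_line in content.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            # Consecutive User-agent lines share one group; otherwise a new group starts.
            if not previous_was_agent:
                current_agents = []
            current_agents.append(value)
            previous_was_agent = True
        else:
            if field in ("allow", "disallow"):
                for agent in current_agents:
                    rules[agent].append((field.capitalize(), value))
            previous_was_agent = False  # any other line ends a run of User-agent lines
    return dict(rules)


def print_results(rules: dict[str, list[tuple[str, str]]]) -> None:
    """Print each user agent followed by its directives."""
    if not rules:
        print("No Allow/Disallow directives found.")
    for agent, directives in rules.items():
        print(f"User-agent: {agent}")
        for directive, path in directives:
            print(f"  {directive}: {path or '(empty)'}")


def main(argv: list[str]) -> None:
    if len(argv) < 2:
        print("Usage: python check_robots.py https://www.example.com")
        return
    content = fetch_robots_txt(argv[1])
    if content is not None:
        print_results(analyze_robots_txt(content))


if __name__ == "__main__":
    main(sys.argv)
```

Run it as `python check_robots.py https://www.example.com`. Keeping fetching, parsing, and printing in separate functions makes the parser easy to test on a string without touching the network.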

Showcase Your SEO Success with API-Powered Robots.txt Insights!

Understanding Robots.txt Files

What is a Robots.txt File?

A robots.txt file is a plain-text file placed at the root of a website that tells web crawlers which URLs on the site they may request. It plays an important role in SEO by controlling which parts of a website search engines crawl, which in turn influences how the site appears in search results. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.
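To see how a crawler interprets these instructions, the sketch below feeds a small, made-up robots.txt to Python's standard-library `urllib.robotparser` and asks whether two user agents may fetch a few URLs. The rules, the `ExampleBot` agent, and the `www.example.com` URLs are illustrative assumptions. This parser applies the first matching rule in file order and ignores `*`/`$` wildcards inside paths, so it only approximates how major search engines evaluate rules.

```python
from urllib.robotparser import RobotFileParser

# A small, hypothetical robots.txt used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/

User-agent: ExampleBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse text directly instead of downloading it

checks = [
    ("Googlebot", "https://www.example.com/blog/post-1"),     # falls back to the * group
    ("Googlebot", "https://www.example.com/admin/settings"),  # matches Disallow: /admin/
    ("ExampleBot", "https://www.example.com/blog/post-1"),    # ExampleBot is blocked site-wide
]
for agent, url in checks:
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent:<10} {url} -> {verdict}")
```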

Importance of Robots.txt for SEO

- Optimize Crawl Budget

Search engines allocate a limited crawl budget to each website. By using robots.txt, you can keep crawlers away from low-value URLs so that they spend their time on your most important pages, helping ensure that valuable content is discovered and indexed.

- Block Duplicate and Non-Public Pages

The robots.txt file allows you to keep search engines from crawling duplicate content or pages that are not meant for public visibility, such as staging or administrative pages.

- Hide Resources

You can prevent search engines from crawling specific files or media. Keep in mind that robots.txt is publicly readable and only advisory, so it should not be relied on to protect sensitive data; use authentication for that.
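As a rough way to see where crawl budget goes, the following sketch splits a list of site URLs into those a crawler may fetch and those the file blocks, using Python's `urllib.robotparser`. The rules, the URL list, and the helper name `audit_urls` are hypothetical. The report only reflects what well-behaved crawlers will skip; it says nothing about access control.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of duplicate search-result pages,
# a staging copy of the site, and an internal admin area.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /staging/
Disallow: /admin/
"""


def audit_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> dict[str, list[str]]:
    """Split URLs into those the agent may crawl and those robots.txt blocks."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    report: dict[str, list[str]] = {"crawlable": [], "blocked": []}
    for url in urls:
        report["crawlable" if parser.can_fetch(agent, url) else "blocked"].append(url)
    return report


site_urls = [
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/search?q=widget",  # prefix rule /search also covers /search-tips etc.
    "https://www.example.com/staging/products/blue-widget",
    "https://www.example.com/admin/login",
]
report = audit_urls(ROBOTS_TXT, site_urls)
for status, urls in report.items():
    print(f"{status}: {len(urls)}")
    for url in urls:
        print("   ", url)
```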

How to Create a Robots.txt File

Creating a robots.txt file involves a few simple steps:

- Create a File and Name It robots.txt: The file name must be all lowercase, and the file must be placed in the root directory of your website.

- Add Directives to the robots.txt File: Specify which user agents (crawlers) can access which parts of your site using directives such as `Allow` and `Disallow`.

- Upload the robots.txt File: Ensure it is accessible at www.example.com/robots.txt.

- Test Your robots.txt File: Use a robots.txt validator or your search engine's webmaster tools to verify that it is correctly configured.
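The create-and-test steps above can be scripted. This is a minimal sketch under stated assumptions: the rule layout passed to the hypothetical `build_robots_txt` helper, the paths, and the sitemap URL are illustrative, and the test step uses `urllib.robotparser` as a quick local check rather than a search engine's own validator. `Allow` lines are written before `Disallow` lines because some parsers, including Python's, apply the first matching rule.

```python
from __future__ import annotations

from pathlib import Path
from urllib.robotparser import RobotFileParser


def build_robots_txt(groups: dict[str, dict[str, list[str]]], sitemap: str | None = None) -> str:
    """Render {user_agent: {"allow": [...], "disallow": [...]}} as robots.txt text."""
    lines: list[str] = []
    for agent, rules in groups.items():
        lines.append(f"User-agent: {agent}")
        # Allow first, so first-match parsers (such as urllib.robotparser) honor the exception.
        lines.extend(f"Allow: {path}" for path in rules.get("allow", []))
        lines.extend(f"Disallow: {path}" for path in rules.get("disallow", []))
        lines.append("")  # blank line between groups for readability
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")  # Sitemap takes an absolute URL
    return "\n".join(lines).rstrip("\n") + "\n"


def write_robots_txt(text: str, web_root: Path) -> Path:
    """Save the file as <web_root>/robots.txt (lowercase name, site root)."""
    target = web_root / "robots.txt"
    target.write_text(text, encoding="utf-8")
    return target


robots = build_robots_txt(
    {"*": {"allow": ["/admin/help/"], "disallow": ["/admin/"]}},
    sitemap="https://www.example.com/sitemap.xml",
)
print(robots)

# Test step: confirm the rules behave as intended before uploading.
checker = RobotFileParser()
checker.parse(robots.splitlines())
for url in ("https://www.example.com/admin/help/faq", "https://www.example.com/admin/users"):
    print(url, "->", "allowed" if checker.can_fetch("Googlebot", url) else "blocked")
```

To place the file, call `write_robots_txt(robots, Path("/path/to/your/web/root"))` with your own document root, then confirm the file loads at the site's `/robots.txt` URL.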

Best Practices for Robots.txt

- Use a New Line for Each Directive: This keeps your file organized and easy to read.

- Use Each User-Agent Only Once: Grouping all rules for a crawler under a single `User-agent` line prevents conflicting rules within your file.

- Use Wildcards: The `*` wildcard matches any sequence of characters and `$` marks the end of a URL, so a single rule can cover a whole group of URLs (for example, `Disallow: /*.pdf$`).

- Include Comments: Use the hash symbol (`#`) to add notes for future reference.

- Keep the File Error-Free: Regularly check your robots.txt for errors to ensure it matches your crawling strategy.
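Python's `urllib.robotparser` does not interpret `*` or `$` inside paths, so to make the wildcard rule concrete, here is a minimal sketch of the matching described in RFC 9309 (the Robots Exclusion Protocol): `*` matches any run of characters, a trailing `$` anchors the end of the path, the longest matching pattern wins, and `Allow` wins a tie. The helper names and sample rules are hypothetical, and real crawlers also normalize percent-encoding, which this sketch skips.

```python
from __future__ import annotations

import re


def pattern_to_regex(pattern: str) -> re.Pattern[str]:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then turn each '*' into '.*'.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Apply one group's (directive, pattern) rules: longest match wins, Allow wins ties."""
    best_length = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty pattern (e.g. "Disallow:") matches nothing
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length = len(pattern)
                allowed = is_allow
    return allowed


rules = [
    ("Disallow", "/*.pdf$"),         # any path ending in .pdf
    ("Disallow", "/private/"),
    ("Allow", "/private/public-*"),  # longer, so it overrides /private/ for these pages
]
for path in ("/files/report.pdf", "/files/report.pdf?download=1",
             "/private/notes", "/private/public-page"):
    print(path, "->", "allowed" if is_allowed(rules, path) else "blocked")
```

Note how `$` changes the meaning: `/files/report.pdf?download=1` is not blocked because the path no longer ends in `.pdf`.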

Common Mistakes to Avoid

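- Not Placing robots.txt in the Root Directory: Crawlers only look for the file at the root of the host (for example, www.example.com/robots.txt), so make sure it is located at that URL.

- Using Noindex Instructions in robots.txt: `Noindex` is not a valid robots.txt directive; use a robots meta tag or an `X-Robots-Tag` HTTP header instead.

- Blocking JavaScript and CSS: Doing so can prevent search engines from rendering your pages correctly.

- Not Blocking Access to Unfinished Pages: Keep crawlers away from staging or incomplete content.

- Using Absolute URLs: Use relative paths (such as `/private/`) in `Allow` and `Disallow` rules; the `Sitemap` directive is the exception and takes a full URL.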
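Several of these mistakes can be caught automatically. The sketch below is a hypothetical, heuristic checker, not an official validator: `lint_robots_txt` flags `Noindex` lines, absolute URLs in `Allow`/`Disallow` rules, `Disallow` rules that appear to target `.js` or `.css` files, and repeated `User-agent` values, while `is_root_location` checks that a robots.txt URL sits at the site root. Whether unfinished pages are blocked depends on your site, so it is not checked.

```python
from __future__ import annotations

import re
from urllib.parse import urlsplit

# Heuristic: a Disallow path that mentions a .js or .css file extension.
SCRIPT_OR_STYLE = re.compile(r"\.(js|css)\b", re.IGNORECASE)


def lint_robots_txt(content: str) -> list[str]:
    """Return warnings for a few common robots.txt mistakes."""
    warnings: list[str] = []
    seen_agents: set[str] = set()
    for number, raw_line in enumerate(content.splitlines(), start=1):
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field == "noindex":
            warnings.append(f"line {number}: Noindex is not a valid robots.txt directive")
        elif field == "user-agent":
            if value.lower() in seen_agents:
                warnings.append(f"line {number}: User-agent '{value}' is used more than once")
            seen_agents.add(value.lower())
        elif field in ("allow", "disallow"):
            if value.lower().startswith(("http://", "https://")):
                warnings.append(f"line {number}: use a relative path instead of an absolute URL")
            if field == "disallow" and SCRIPT_OR_STYLE.search(value):
                warnings.append(f"line {number}: blocking JavaScript/CSS can hurt rendering")
    return warnings


def is_root_location(robots_url: str) -> bool:
    """True if the URL points at /robots.txt in the site root."""
    return urlsplit(robots_url).path == "/robots.txt"


sample = """\
User-agent: *
Disallow: https://www.example.com/private/
Noindex: /old-page/
Disallow: /*.js$

User-agent: *
Disallow: /tmp/
"""
for warning in lint_robots_txt(sample):
    print(warning)
print(is_root_location("https://www.example.com/blog/robots.txt"))
```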

Conclusion

A well-configured robots.txt file is essential for effective SEO management. By following best practices and avoiding common pitfalls, you can maximize your site's visibility while keeping crawlers out of areas you do not want crawled. Regularly reviewing and updating your robots.txt file ensures that it stays aligned with your website's goals and user needs.

Versatel Networks API Backend Service

The provided script can significantly enhance our API backend services by serving as the basis for endpoints tailored to customer needs. Such an API can analyze robots.txt files and return structured insights about a website's crawl configuration. However, the benefits extend far beyond SEO optimization or robots.txt management.
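As an illustration of how the script could sit behind an API endpoint, here is a minimal sketch using only Python's standard library (a WSGI application, with `urllib.request` in place of `requests`). It is not Versatel Networks' actual service: the `/analyze` route, the `url`, `agent`, and `path` query parameters, and the JSON response shape are all hypothetical. The fetch function is passed in so the endpoint can be tested without network access.

```python
from __future__ import annotations

import json
import urllib.request
from typing import Callable
from urllib.parse import parse_qs
from urllib.robotparser import RobotFileParser


def fetch_with_urllib(site: str) -> str:
    """Default fetcher: download <site>/robots.txt using only the standard library."""
    # Note: a missing file (404) raises HTTPError here; a production service
    # might instead treat it as "everything allowed".
    with urllib.request.urlopen(site.rstrip("/") + "/robots.txt", timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def analyze(content: str, agent: str, paths: list[str]) -> dict:
    """Report whether each path is crawlable for agent, plus any Sitemap URLs."""
    parser = RobotFileParser()
    parser.parse(content.splitlines())
    return {
        "agent": agent,
        "paths": {path: parser.can_fetch(agent, path) for path in paths},
        "sitemaps": parser.site_maps() or [],
    }


def make_app(fetch: Callable[[str], str] = fetch_with_urllib):
    """Build a WSGI app answering GET /analyze?url=...&agent=...&path=...&path=..."""

    def app(environ, start_response):
        params = parse_qs(environ.get("QUERY_STRING", ""))
        site = params.get("url", [""])[0]
        agent = params.get("agent", ["*"])[0]
        paths = params.get("path", ["/"])
        if environ.get("PATH_INFO") != "/analyze" or not site:
            status, body = "400 Bad Request", {"error": "expected GET /analyze?url=<site>"}
        else:
            try:
                status, body = "200 OK", {"url": site, **analyze(fetch(site), agent, paths)}
            except OSError as exc:  # URLError, HTTPError and timeouts derive from OSError
                status, body = "502 Bad Gateway", {"error": str(exc)}
        payload = json.dumps(body).encode("utf-8")
        start_response(status, [("Content-Type", "application/json"),
                                ("Content-Length", str(len(payload)))])
        return [payload]

    return app


# To serve locally (blocks until interrupted):
#   from wsgiref.simple_server import make_server
#   make_server("127.0.0.1", 8000, make_app()).serve_forever()
```

A request such as `/analyze?url=https://www.example.com&agent=Googlebot&path=/admin/` would then return a small JSON document that a simple front end can display.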

Comprehensive Solution Beyond SEO

By leveraging a simple front-end interface, we make it easy for users to obtain results based on their robots.txt file analysis. This capability not only streamlines operations but also provides valuable insights into website configurations, making it an attractive offering for clients looking to optimize their online presence.

Transforming Ideas into Actionable Tools

Moreover, we can help clients transform their ideas into practical API tools. Whether you are looking to enhance user experience, improve site performance, or implement new features, we can develop customized API solutions that align with specific business objectives. This means that you are not just limited to SEO strategies but can explore a wide range of functionalities tailored to your unique requirements.

Empowering Clients

By integrating this functionality into our API services, we empower clients to manage their digital strategies more effectively. This includes ensuring that their web content is indexed according to their preferences while also providing the flexibility to innovate and adapt as their needs evolve. Our approach is to facilitate the creation of comprehensive API tools that address various aspects of web management, making it easier for clients to achieve their goals.

In summary, we offer more than just SEO insights; we provide the technology and support to help clients turn their concepts into valuable, actionable APIs that enhance their overall online strategy.