In the rapidly evolving digital landscape, web scraping has become an indispensable tool for data acquisition. However, as websites implement increasingly sophisticated anti-scraping measures, developers face growing challenges. At Versatel Networks, we’ve encountered and overcome numerous obstacles in our web scraping projects. This article shares our experiences and insights, with a particular focus on CAPTCHA solving techniques.

Understanding CAPTCHA and Its Impact on Web Scraping

CAPTCHA, an acronym for “Completely Automated Public Turing test to tell Computers and Humans Apart,” is a security measure designed to differentiate between human users and automated bots on the internet. Developed in the late 1990s by researchers at Carnegie Mellon University, CAPTCHAs serve as a form of Turing Test, aiming to ensure that a task can be completed by a human but not by a computer.

CAPTCHAs typically present users with a challenge that may involve identifying distorted or obscured characters, selecting specific images, solving mathematical problems, or interacting with audio recordings. The main goal of this technology is to prevent spam, automated submissions, and abuse of online services, thereby enhancing overall web security.

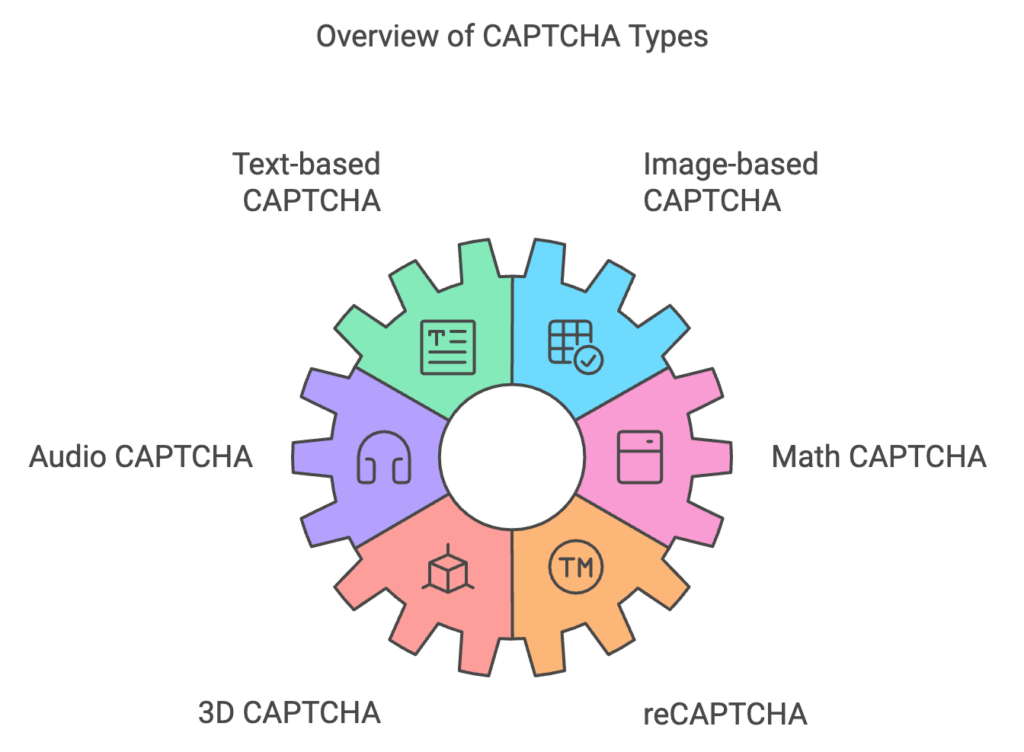

Types of CAPTCHA

Various forms of CAPTCHA have evolved over the years to adapt to advances in technology and to address the increasing sophistication of automated bots:

- Text-based CAPTCHA: These present users with distorted text that they must manually input to prove they are human. They often feature wavy or noisy backgrounds that make it difficult for automated systems to recognize the text.

- Image-based CAPTCHA: These challenges require users to select specific images or parts of images based on given criteria.

While CAPTCHAs serve a crucial role in protecting websites from malicious bots, they also present significant hurdles for legitimate web scraping activities.

Technical Challenges in Web Scraping

Anti-Scraping Measures

Websites increasingly employ sophisticated anti-scraping technologies designed to thwart automated data extraction efforts. These measures can include CAPTCHAs, rate limiting, IP blocking, and requiring user authentication. Consequently, scrapers must adopt advanced techniques to mimic human behavior and evade detection. Developing human-like browsing patterns has emerged as a critical strategy, involving methods such as session management, simulating click patterns, and varying scroll behaviors.

CAPTCHA Handling

Handling CAPTCHAs remains one of the most daunting challenges for scrapers. These automated tests, designed to differentiate between humans and bots, require scrapers to implement effective bypass techniques or employ CAPTCHA-solving services. The ability to navigate these challenges can significantly impact the success of scraping endeavors.

Advanced Techniques in Web Scraping

Advanced techniques in web scraping involve the use of headless browsers and Document Object Model (DOM) parsing. Headless browsers, such as Puppeteer and Selenium, execute without a graphical user interface, allowing for interaction with dynamic web content generated by AJAX and JavaScript. This makes it possible to extract data that may not be immediately visible in the HTML code. Combining headless browsers with DOM parsing allows for efficient extraction from complex websites.

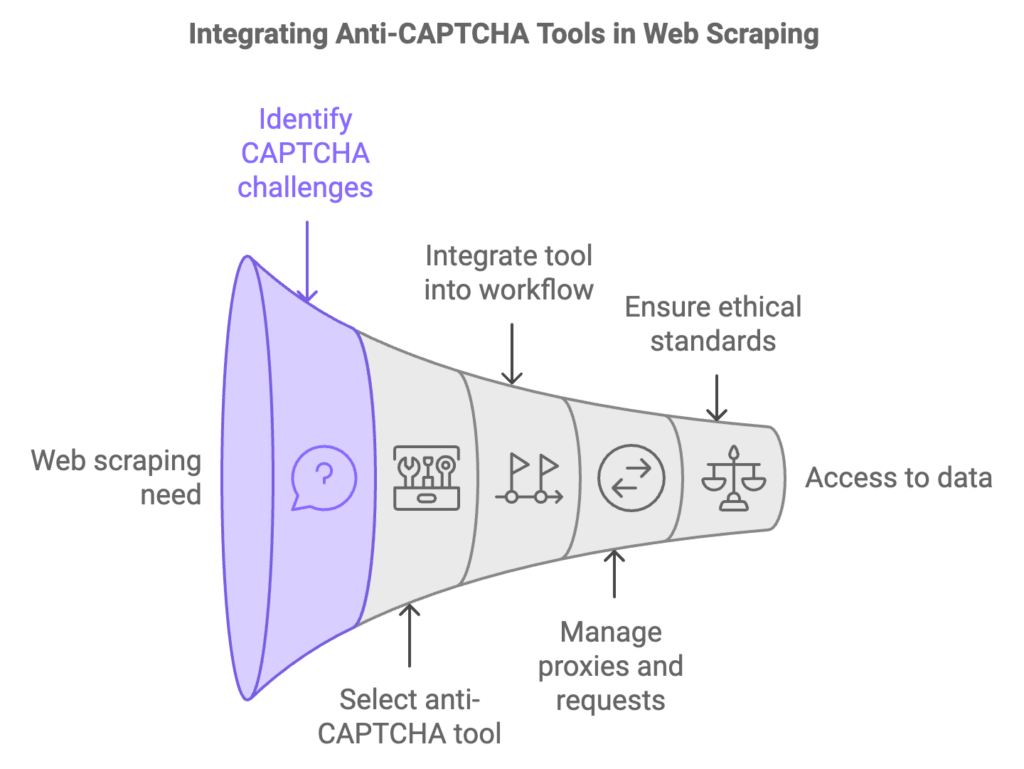

Anti-CAPTCHA Libraries and APIs

Numerous libraries and APIs exist that provide automated CAPTCHA-solving capabilities. These tools employ advanced algorithms to analyze and solve CAPTCHAs, which can be integrated into scraping workflows to automate the process effectively. Dedicated scraping platforms like ScrapingBee offer a comprehensive suite of tools that facilitate interactions with CAPTCHA-protected sites, addressing issues such as headless browsing, proxy management, and request throttling. By leveraging these tools and frameworks, web scrapers can navigate the complexities of CAPTCHAs more effectively, ensuring that they maintain access to valuable data while adhering to ethical and legal standards.

Our Approach to CAPTCHA Solving at Versatel Networks

At Versatel Networks, we’ve developed a multi-faceted approach to tackle CAPTCHAs effectively:

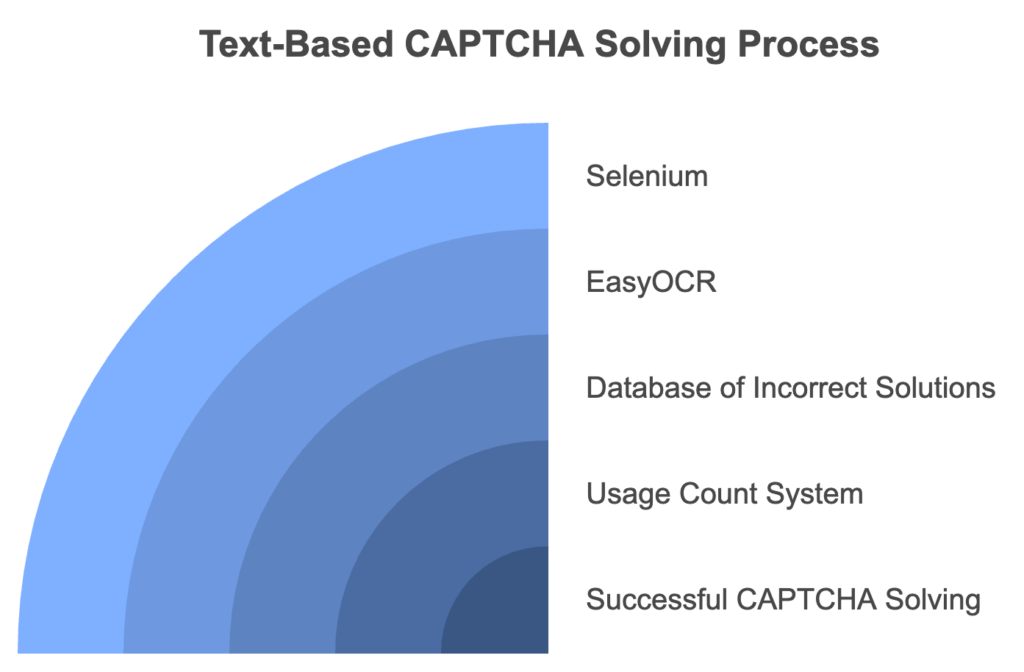

1. Text-Based CAPTCHA Solving

For text-based CAPTCHAs, we’ve found success using a combination of Selenium and EasyOCR. Here’s our process:

- We use Selenium to interact with the website and capture the CAPTCHA image.

- EasyOCR is then employed to read the text from the captured image.

- To improve accuracy, we maintain a database of incorrectly solved CAPTCHAs for further analysis and manual identification.

- We implement a usage count system to track how often each solution is used, helping us refine our model over time.

- Versatility: The combination of Selenium and EasyOCR provides a flexible solution adaptable to various text-based CAPTCHAs.

- Continuous improvement: The database of incorrectly solved CAPTCHAs allows for ongoing refinement and learning.

- Efficiency tracking: The usage count system helps identify the most effective solutions over time.

- Scalability: This approach can be easily scaled to handle a large volume of CAPTCHAs.

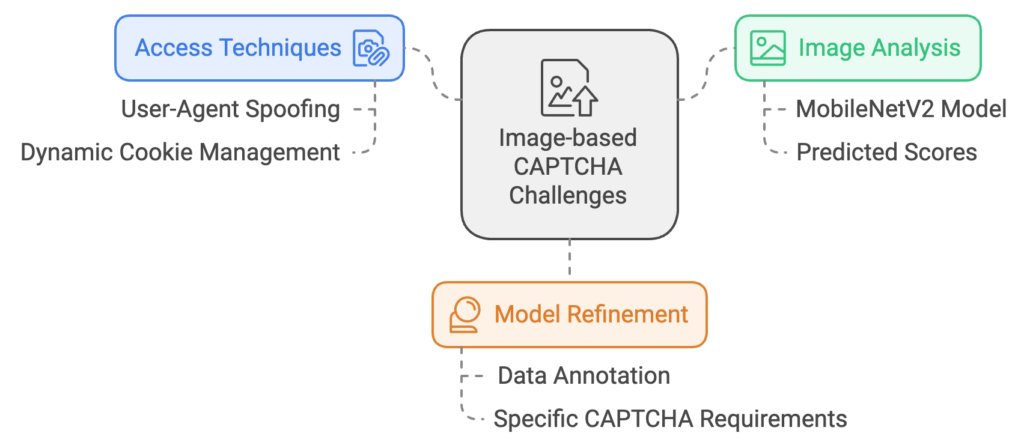

2. Image-Based CAPTCHA Solving

Image-based CAPTCHAs present a different set of challenges. Our approach includes:

- Using techniques like User-Agent spoofing and dynamic cookie management to access CAPTCHA images.

- Employing TensorFlow’s MobileNetV2 model for initial image analysis:

import tensorflow as tf

model = tf.keras.applications.MobileNetV2(weights='imagenet')

- Utilizing the model’s predicted scores to identify objects in the images.

- Conducting further data annotation to refine the model for specific CAPTCHA requirements.

- Advanced techniques: User-Agent spoofing and dynamic cookie management demonstrate a sophisticated approach to accessing CAPTCHA images.

- Powerful image analysis: Utilizing TensorFlow’s MobileNetV2 model provides robust image recognition capabilities.

- Adaptability: The approach allows for further data annotation, enabling customization for specific CAPTCHA types.

- Cutting-edge technology: Leveraging deep learning models like MobileNetV2 keeps the solution at the forefront of image recognition technology.

3. Hybrid Approach: Chrome Debug Mode and Jupyter Notebook

We’ve found that combining Chrome’s debug mode with Jupyter Notebook provides a powerful environment for analyzing and solving complex CAPTCHAs:

- We use Chrome’s debug mode to study the DOM and CAPTCHA step-by-step:

/Applications/Google\ Chrome.app/Contents/MacOS/Google\ Chrome --user-data-dir=/Users/username/Documents/chrome-driver-new-profile --remote-debugging-port=9222

- Jupyter Notebook is used for line-by-line execution of our Python scripts, allowing for real-time adjustments and analysis.

Overall advantages

- Comprehensive approach: The combination of text-based and image-based solutions covers a wide range of CAPTCHA types.

- Iterative improvement: Both approaches incorporate methods for continuous refinement and adaptation.

- Technical sophistication: The use of advanced tools and techniques demonstrates a high level of expertise in CAPTCHA solving.

- Customizability: Both methods allow for tailoring to specific CAPTCHA challenges encountered on different websites.

Lessons Learned and Best Practices

Through our experiences, we’ve identified several key factors for successful web scraping:

- Data is King: The success and speed of CAPTCHA solving often depend on the size and quality of your dataset. Continuously collecting and annotating CAPTCHA images is crucial.

- Adaptive Techniques: Websites frequently update their CAPTCHA systems. Stay adaptable by regularly reviewing and updating your solving techniques.

- Ethical Considerations: Always respect website terms of service and consider the ethical implications of your scraping activities, including server load and user privacy.

- Legal Compliance: Stay informed about relevant laws and regulations to ensure your web scraping activities remain within legal boundaries.

- Custom Model Training: For more complex CAPTCHAs, consider training custom models using tools like Tesseract OCR to improve recognition accuracy.

Conclusion

Web scraping, particularly when dealing with CAPTCHAs, requires a comprehensive approach combining various technologies and strategies. At Versatel Networks, we continually refine our methods to stay ahead of evolving challenges. By sharing our experiences, we hope to contribute to the broader community’s understanding and capabilities in this critical area of data acquisition.

Remember, while these techniques can be powerful, it’s essential to use them responsibly and ethically, always respecting website policies and user privacy. As the landscape of web scraping and CAPTCHA technology continues to evolve, staying informed and adaptable will be key to successful and ethical data extraction practices.