Web scraping has become an essential tool for businesses, researchers, and developers looking to extract valuable data from the web. However, as websites become more sophisticated, so do the techniques required to scrape them effectively. This article explores advanced web scraping techniques, including handling JavaScript-rendered content, using headless browsers, and managing proxies.

1. Understanding Web Scraping

Web scraping is the process of automatically extracting information from websites. This can involve pulling data from static HTML pages, but many modern websites use JavaScript to dynamically load content, which presents unique challenges for scrapers. As a result, effective web scraping requires a deep understanding of various technologies and methodologies.

2. Handling JavaScript-Rendered Content

Many websites now leverage JavaScript frameworks like React, Angular, and Vue.js to render content. This can make traditional scraping methods ineffective, as the data may not be present in the initial HTML response.

2.1 Techniques for Scraping JavaScript-Rendered Content

2.1.1 Use of Headless Browsers

Headless browsers simulate real user interactions in a browser environment without a graphical user interface. These tools execute JavaScript, allowing scrapers to access dynamically loaded content. Popular headless browsers include:

- Puppeteer: A Node.js library that provides a high-level API to control Chrome or Chromium over the DevTools Protocol.

- Playwright: An alternative to Puppeteer that supports multiple browsers (Chromium, Firefox, WebKit) and provides more features for testing and scraping.

Example: Using Puppeteer to Scrape a JavaScript-Rendered Page

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto('https://example.com');

// Wait for a specific element to ensure the content is loaded

await page.waitForSelector('#content');

const data = await page.evaluate(() => {

return document.querySelector('#content').innerText;

});

console.log(data);

await browser.close();

})();

2.1.2 API Interception

Sometimes, the data rendered on a website is fetched from a backend API. By intercepting these API requests, scrapers can directly access the data without having to render the entire page.

Example: Using Puppeteer to Intercept API Requests

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.setRequestInterception(true);

page.on('request', (request) => {

if (request.url().includes('api/data')) {

request.continue();

} else {

request.abort();

}

});

await page.goto('https://example.com');

await page.waitForResponse(response => response.url().includes('api/data'));

const data = await page.evaluate(() => {

return JSON.parse(document.querySelector('body').innerText);

});

console.log(data);

await browser.close();

})();

2.2 Using Selenium for JavaScript-Rendered Content

Selenium is another popular tool for automating web browsers. It can interact with web elements just like a human user, making it ideal for scraping JavaScript-heavy websites.

Example: Scraping with Selenium

from selenium import webdriver

driver = webdriver.Chrome()

driver.get('https://example.com')

# Wait for the content to load

driver.implicitly_wait(10)

data = driver.find_element_by_id('content').text

print(data)

driver.quit()

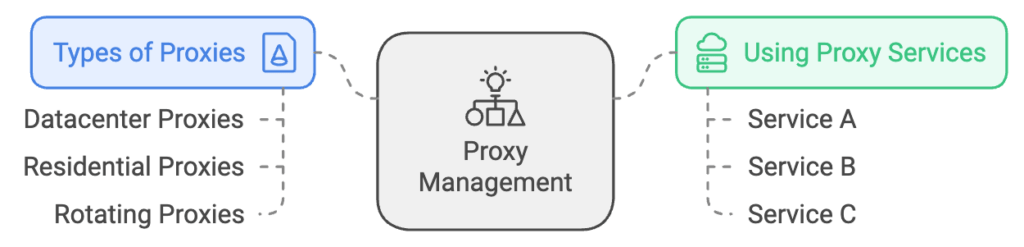

3. Proxy Management

Web scraping can often lead to IP bans, especially when scraping large volumes of data. To mitigate this, effective proxy management is crucial.

3.1 Types of Proxies

- Datacenter Proxies: Fast and cost-effective, but easily detectable and often blocked by websites.

- Residential Proxies: IP addresses provided by Internet Service Providers (ISPs), making them appear as legitimate users. These are harder to detect and block.

- Rotating Proxies: Automatically change IP addresses at set intervals or after each request, reducing the risk of bans.

3.2 Using Proxy Services

Several services provide proxy management solutions, including:

- Bright Data (formerly Luminati): A well-known residential proxy provider with a large pool of IP addresses.

- ScraperAPI: Handles proxy rotation, retries, and IP banning issues.

- Oxylabs: Offers both residential and datacenter proxies with a user-friendly interface.

3.3 Implementing Proxy Rotation

When implementing proxy rotation, it’s crucial to handle errors gracefully. Use a pool of proxies and switch them upon receiving HTTP error codes indicating a ban (like 403 Forbidden).

Example: Using Requests with Proxy Rotation in Python

import requests

import random

proxies = [

'http://proxy1.com:port',

'http://proxy2.com:port',

'http://proxy3.com:port',

]

def fetch_data(url):

proxy = random.choice(proxies)

response = requests.get(url, proxies={'http': proxy, 'https': proxy})

if response.status_code == 200:

return response.text

else:

print(f"Failed to fetch data using {proxy}: {response.status_code}")

data = fetch_data('https://example.com')

print(data)

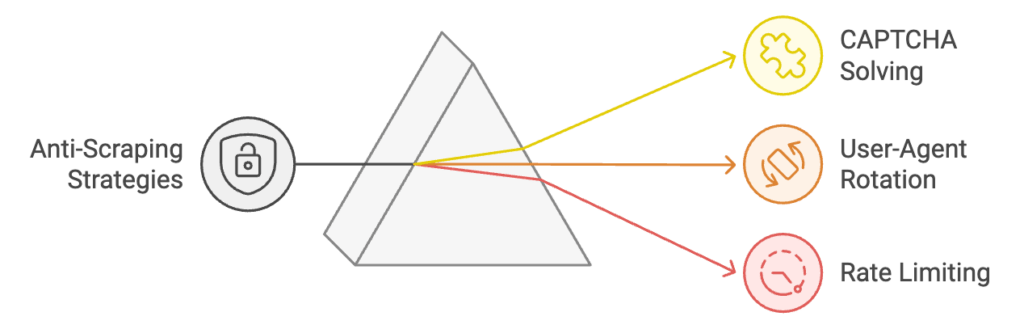

4. Handling Anti-Scraping Measures

Websites employ various techniques to protect against scraping, including CAPTCHAs, rate limiting, and bot detection. Here are some strategies to overcome these challenges:

4.1 CAPTCHA Solving

Using CAPTCHA solving services can help bypass these barriers. Services like 2Captcha and Anti-Captcha provide APIs to solve CAPTCHAs automatically.

4.2 User-Agent Rotation

Changing the User-Agent string in your requests can help simulate requests from different browsers, reducing the likelihood of detection.

Example: Rotating User-Agents in Python

user_agents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.0.1 Safari/605.1.15',

]

headers = {

'User-Agent': random.choice(user_agents)

}

response = requests.get('https://example.com', headers=headers)

4.3 Rate Limiting

Implementing delays between requests can prevent your scraper from overwhelming the server and getting temporarily banned. Use libraries like time in Python to add sleep intervals.

import time

for url in urls:

fetch_data(url)

time.sleep(random.uniform(1, 3)) # Sleep for a random time between 1 and 3 seconds

5. Ethical Considerations in Web Scraping

While web scraping can provide valuable data, it’s essential to respect the legal and ethical boundaries. Here are some guidelines:

- Read the Robots.txt File: Check the website’s

robots.txtfile to see which pages are allowed or disallowed for scraping. - Respect Terms of Service: Always review and adhere to the website’s terms of service regarding data usage.

- Avoid Overloading Servers: Implement rate limiting to avoid causing performance issues for the target website.

Conclusion

Advanced web scraping techniques are crucial for efficiently extracting data from the modern web. By mastering tools like headless browsers, managing proxies effectively, and employing strategies to handle JavaScript-rendered content and anti-scraping measures, you can become a proficient web scraper. Always remember to scrape ethically and responsibly, respecting the rights of website owners and users alike.

By employing these techniques and maintaining a strong ethical framework, you can navigate the complex landscape of web scraping while ensuring your projects are successful and sustainable.

Struggling to Extract Data from Challenging Websites?

If you’re facing difficulties using advanced web scraping techniques to access data from your targeted website, don’t worry! Our expert team is here to help. We specialize in overcoming complex scraping challenges, including handling JavaScript-rendered content and navigating anti-scraping measures.

Additionally, we offer CAPTCHA solving services to seamlessly bypass those pesky protections, ensuring you get the data you need.

Contact us today to discuss your web scraping needs, and let’s unlock the data together!

Related Articles: