Challenges in Mimicking Human Behavior

Mimicking human behavior online is difficult, particularly in web scraping and automation. As bot detection technologies advance, writing scripts that convincingly simulate human interaction becomes steadily harder.

Detection Mechanisms

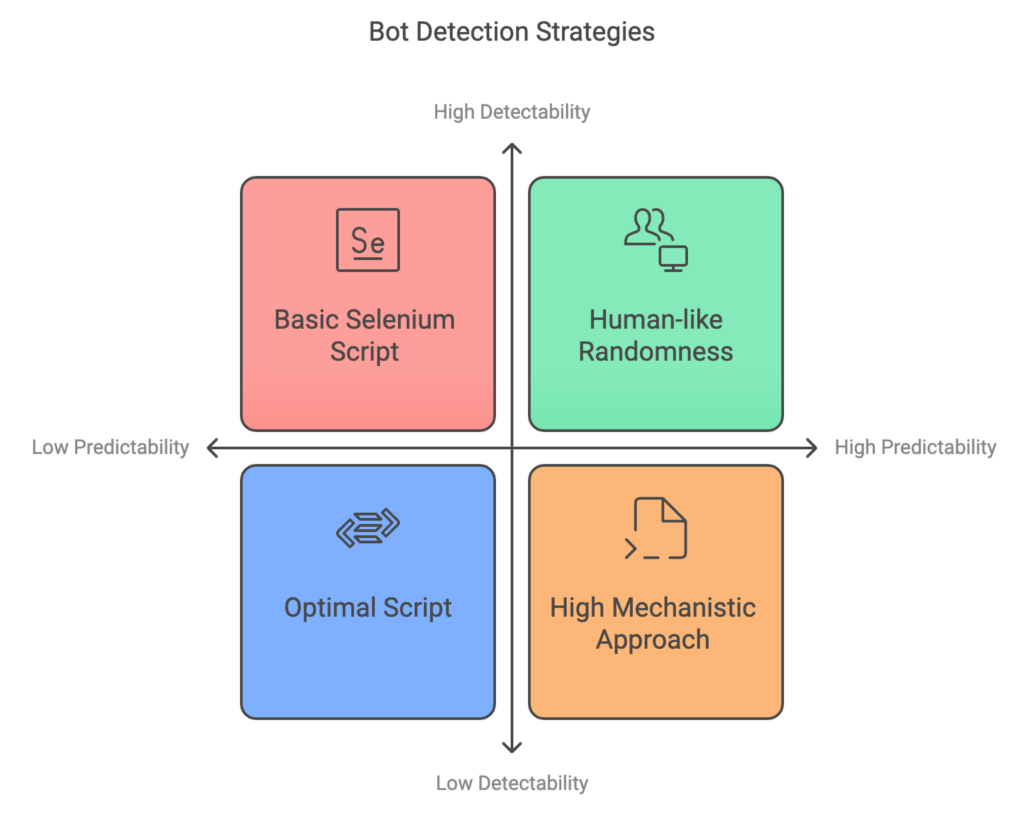

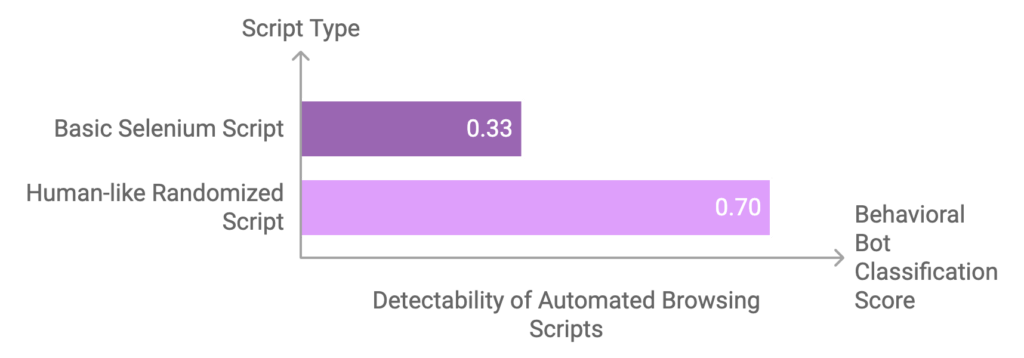

Websites have implemented sophisticated mechanisms to detect automated browsing. These systems often analyze behavioral patterns, such as scrolling and clicking, to differentiate human users from bots. For example, a basic Selenium script may generate predictable scrolling patterns and receive a low behavioral classification score of 0.33, meaning its mechanical behavior is easy to detect. Developers therefore adapt their scripts to incorporate human-like randomness and irregularity.
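To make "predictable patterns" concrete, here is a minimal sketch of one way timing regularity could be measured: the coefficient of variation of the gaps between scroll events. This is an illustrative heuristic only, not the scoring method of any particular detection product, and the function name and sample timestamps are assumptions.

```python
import statistics

def interval_variation(timestamps):
    """Coefficient of variation of the gaps between consecutive event timestamps.

    Values near 0 mean the events are evenly spaced (machine-like);
    larger values mean the spacing is irregular.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three timestamps")
    mean_gap = statistics.mean(gaps)
    if mean_gap <= 0:
        raise ValueError("timestamps must increase over time")
    return statistics.pstdev(gaps) / mean_gap

# Hypothetical scroll-event times in seconds.
scripted = [0.0, 0.5, 1.0, 1.5, 2.0]   # fixed 0.5 s between events
irregular = [0.0, 0.3, 1.4, 1.6, 2.9]  # uneven gaps

scripted_cv = interval_variation(scripted)
irregular_cv = interval_variation(irregular)
```

A real detector would combine many signals; this single statistic only shows why fixed intervals stand out.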

Human-like Interaction Simulation

To avoid detection, automated scripts must convincingly mimic human interactions. Common techniques include:

- Randomized Clicks and Movements: Just as humans do not consistently click on the exact same spot, automated tools should introduce variability in click positions and movement paths. For instance, employing JavaScript libraries like Puppeteer allows for randomization of click locations, making automated actions appear more organic.

- Non-linear Scrolling and Delays: Introducing random delays and simulating non-linear scrolling behavior can further disguise bot activity. This is crucial for creating a semblance of natural user behavior, as human users often scroll and navigate through web pages in a less predictable manner.

- Handling JavaScript and CAPTCHAs: Many websites deploy JavaScript challenges and CAPTCHAs to thwart bots. Preparing scraping bots to handle these challenges, either through solving services or other techniques, is essential to ensure smooth operation.

Behavioral Predictability

The predictability of human behavior adds another layer of complexity. Research indicates that human actions are influenced by contextual factors such as time, location, activity, and social ties. When scrapers ignore these factors, their actions can be easily identified as automated. Behavior also becomes more predictable as more contextual information is available, so a script whose actions do not fit its apparent context stands out. Simulating human interactions therefore requires a more nuanced approach than adding randomness alone.

Techniques for Mimicking Human Behavior

Mimicking human behavior during web scraping is a common way to reduce detection by anti-bot mechanisms. It does not by itself make scraping compliant with a website's terms of service, which should be checked separately. Several strategies have been developed for this purpose, particularly for tools like Selenium, Puppeteer, or Playwright.

Randomized User Interactions

Random Clicks and Mouse Movements

One effective approach is to randomize mouse movements and clicks. Humans do not click on the same spot every time, nor do their mouse movements follow straight lines. By incorporating randomness into the click positions and movement paths, bots can simulate more authentic human interactions.
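As a rough illustration, the sketch below picks a random click point inside an element's bounding box and builds a curved (quadratic Bezier) pointer path instead of a straight line. The function names, the margin, and the bend factor are assumptions chosen for the example, and the code only computes coordinates; it does not drive a browser.

```python
import random

def random_point_in_box(left, top, width, height, margin=0.2, rng=random):
    """Pick a click point inside a box, staying away from its outer margin."""
    x = left + width * rng.uniform(margin, 1 - margin)
    y = top + height * rng.uniform(margin, 1 - margin)
    return x, y

def curved_path(start, end, steps=20, bend=0.3, rng=random):
    """Return steps + 1 points on a quadratic Bezier curve from start to end."""
    (x0, y0), (x2, y2) = start, end
    dx, dy = x2 - x0, y2 - y0
    # Control point: the midpoint pushed sideways, perpendicular to the line,
    # by a random fraction of the line's length.
    offset = rng.uniform(-bend, bend)
    cx = (x0 + x2) / 2 - dy * offset
    cy = (y0 + y2) / 2 + dx * offset
    path = []
    for i in range(steps + 1):
        t = i / steps
        x = (1 - t) ** 2 * x0 + 2 * (1 - t) * t * cx + t ** 2 * x2
        y = (1 - t) ** 2 * y0 + 2 * (1 - t) * t * cy + t ** 2 * y2
        path.append((x, y))
    return path

rng = random.Random(1)  # seeded only to make the example repeatable
target = random_point_in_box(100, 200, 80, 40, rng=rng)
path = curved_path((0, 0), target, steps=10, rng=rng)
```

The resulting points could then be fed to a browser automation tool's pointer API, for example as successive offsets in Selenium's ActionChains or as coordinates for Playwright's mouse movement calls.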

Simulating Human-Like Scrolling

Human-like scrolling is equally important. This involves mimicking the natural rhythm of scrolling through content, with uneven step sizes and pauses, and engaging with web elements in a non-linear order. Scrolling logic of this kind helps automated scripts resemble organic user activity, making detection less likely.
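One possible way to express such scrolling is as a plan of uneven steps with pauses, plus an occasional small scroll back up. The sketch below only generates the plan; the step sizes, pause ranges, and the 15% backtrack probability are illustrative assumptions rather than measured human values.

```python
import random

def scroll_plan(total_px, rng=random):
    """Split a scroll of total_px into (step_px, pause_s) pairs.

    Steps vary in size, and the plan sometimes scrolls back up a little.
    The steps always sum to total_px.
    """
    plan = []
    remaining = total_px
    while remaining > 0:
        step = min(remaining, rng.randint(80, 400))
        plan.append((step, rng.uniform(0.2, 1.5)))
        remaining -= step
        # Occasionally scroll back up, as a reader might when re-reading.
        if remaining > 0 and rng.random() < 0.15:
            scrolled_so_far = total_px - remaining
            back = min(rng.randint(30, 120), scrolled_so_far)
            plan.append((-back, rng.uniform(0.3, 1.0)))
            remaining += back
    return plan

plan = scroll_plan(2000, rng=random.Random(7))
```

With Selenium, each step could be applied with something like `driver.execute_script("window.scrollBy(0, arguments[0]);", step)` followed by a sleep for the paired pause.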

Behavioral Variability

Introducing Delays

Another critical technique is to introduce delays between requests. This can be done by randomizing intervals using methods like time.sleep(random.uniform(min, max)), which emulates the time a human would typically take to process information before moving on to the next action.
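A small wrapper around that call might look like the following sketch. The name `human_pause` and the default bounds are assumptions; the `sleep` parameter is injectable only so the function can be exercised without actually waiting.

```python
import random
import time

def human_pause(min_s=1.0, max_s=4.0, sleep=time.sleep, rng=random):
    """Sleep for a random duration between min_s and max_s seconds.

    Returns the delay that was used, which is handy for logging.
    """
    if not 0 <= min_s <= max_s:
        raise ValueError("expected 0 <= min_s <= max_s")
    delay = rng.uniform(min_s, max_s)
    sleep(delay)
    return delay

# Example: record the delay instead of sleeping.
recorded = []
delay = human_pause(0.5, 2.0, sleep=recorded.append)
```

Naming the bounds `min_s` and `max_s` also avoids shadowing Python's built-in `min` and `max`.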

User Simulation Techniques

Realism can be improved further by simulating human-like behaviors such as random mouse movements, keyboard input, and varied scrolling patterns. Libraries like NumPy can supply the randomness, and Selenium's ActionChains class can chain key presses and pauses to vary typing speed.
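Varied typing speed can be sketched as a per-character delay drawn from a distribution, with longer pauses at word and sentence boundaries. This example uses the standard library's `random` rather than NumPy, and every name and timing value in it is an assumption. The `send_key` argument is any callable that accepts one character.

```python
import random
import time

def type_like_human(send_key, text, mean_delay=0.12, jitter=0.05,
                    sleep=time.sleep, rng=random):
    """Send text one character at a time with irregular delays.

    Returns the list of delays used, one per character.
    """
    delays = []
    for ch in text:
        send_key(ch)
        # Gaussian delay, clamped so it never drops below 20 ms.
        delay = max(0.02, rng.gauss(mean_delay, jitter))
        if ch in " .,":
            # Slightly longer pause at word and sentence boundaries.
            delay += rng.uniform(0.05, 0.25)
        sleep(delay)
        delays.append(delay)
    return delays

# Example: collect characters in a list instead of typing into a page.
typed = []
delays = type_like_human(typed.append, "hi there",
                         sleep=lambda s: None, rng=random.Random(3))
```

With Selenium, a located element's `send_keys` method could be passed as `send_key`.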

Handling Anti-Scraping Technologies

Addressing CAPTCHAs and JavaScript Challenges

Bots must be prepared to handle JavaScript challenges and CAPTCHA systems commonly used to detect automation. Employing CAPTCHA-solving services, along with strategies to dynamically render JavaScript, is crucial for extracting data from web pages that heavily rely on such technologies.

Proxy Management

Utilizing Proxies and IP Rotation

Implementing proxy rotation and IP anonymization helps mask a bot’s IP address and distribute requests across multiple locations. This reduces the likelihood of being flagged or blocked by automated detection systems. Residential proxies are particularly useful because they route traffic through IP addresses assigned to real residential connections, so requests look like they come from ordinary home users. By integrating these techniques, web scrapers can operate more stealthily, making scraping or testing smoother and more effective while avoiding common problems associated with automation detection.
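The proxy rotation described in this section can be sketched as a simple round-robin over a pool. The class name and the proxy addresses below are placeholders, not real endpoints. The returned dictionary follows the shape accepted by the `proxies` argument of the `requests` library, though no request is made here.

```python
import itertools

class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies):
        pool = list(proxies)
        if not pool:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(pool)

    def next_proxy(self):
        """Return the next proxy as a mapping for HTTP and HTTPS traffic."""
        url = next(self._cycle)
        return {"http": url, "https": url}

# Placeholder addresses for illustration only.
rotator = ProxyRotator([
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
])
used = [rotator.next_proxy()["http"] for _ in range(4)]
```

Round-robin is the simplest policy; a production pool would typically also drop proxies that fail or get blocked.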

AI Approaches to Mimicking Behavior

AI systems have made significant advancements in mimicking human behavior, particularly in the context of web scraping and navigation. Researchers have developed methods that infer navigation goals based on prior routes and predict subsequent actions, which can enhance the interaction between AI systems and users by making them more responsive and adaptive.

Human-like AI Development

The evolution of AI technologies has led to the emergence of systems that not only process data but also emulate human decision-making processes. The integration of machine learning and deep learning algorithms enables these systems to analyze and interpret human behaviors more effectively. For instance, supervised learning techniques are employed to categorize behaviors based on input data, allowing AI to recognize and replicate specific actions like clicking, scrolling, or filling out forms.

Mimicking Navigation and Decision-Making

One significant approach to mimicking human behavior is through imitation learning, where AI systems learn from datasets that consist of observations and corresponding actions taken by humans in a given environment. This enables AI agents to replicate human browsing patterns, even in complex and unpredictable scenarios. By understanding the context of a user’s actions, AI can enhance its performance in environments that demand a high level of adaptability, such as online navigation or interactive tasks.
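In its simplest form, imitation learning reduces to behavior cloning: for each observation, pick the action humans chose most often in the recorded data. The sketch below uses a lookup table for this; real systems would typically use a learned model over much richer observations, and the observation and action labels here are invented for illustration.

```python
from collections import Counter, defaultdict

def fit_behavior_clone(demonstrations):
    """Build a policy mapping each observation to its most frequent action.

    demonstrations is an iterable of (observation, action) pairs.
    """
    counts = defaultdict(Counter)
    for observation, action in demonstrations:
        counts[observation][action] += 1
    return {obs: actions.most_common(1)[0][0] for obs, actions in counts.items()}

# Hypothetical recorded human browsing data.
demos = [
    ("article_top", "scroll"),
    ("article_top", "scroll"),
    ("article_top", "click_link"),
    ("form_visible", "type"),
    ("form_visible", "type"),
]
policy = fit_behavior_clone(demos)
```

A table like this cannot generalize to observations it has never seen, which is one reason practical systems replace it with a trained classifier.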

Enhancing User Interaction

Integrating human-like traits, such as creativity and intuition, into AI systems can improve user engagement and experience. For example, AI systems that generate creative and contextually relevant responses can turn routine interactions into more engaging exchanges. The ability to make intuitive leaps during decision-making may further improve the efficiency of AI applications in areas like finance and healthcare, where rapid and accurate responses are critical.

Challenges in Behavior Mimicking

Despite these advancements, challenges remain in effectively mimicking human browsing behavior. The inherent unpredictability of human actions makes it difficult for AI systems to consistently replicate realistic navigation patterns. Researchers are actively exploring advanced algorithms and methodologies to address these challenges, aiming for AI that can better understand and adapt to the nuances of human behavior online.