Web scraping has become an essential skill for data enthusiasts, developers, and businesses aiming to gather valuable insights from the web. Whether you’re looking to extract data for market research, sentiment analysis, or machine learning projects, Python offers robust tools and libraries that simplify the process. In this article, we’ll explore two highly recommended web scraping tools—Jupyter Notebook and Google Colab—and discuss how to leverage Python for effective web scraping.

Jupyter Notebook: Localhost Flexibility

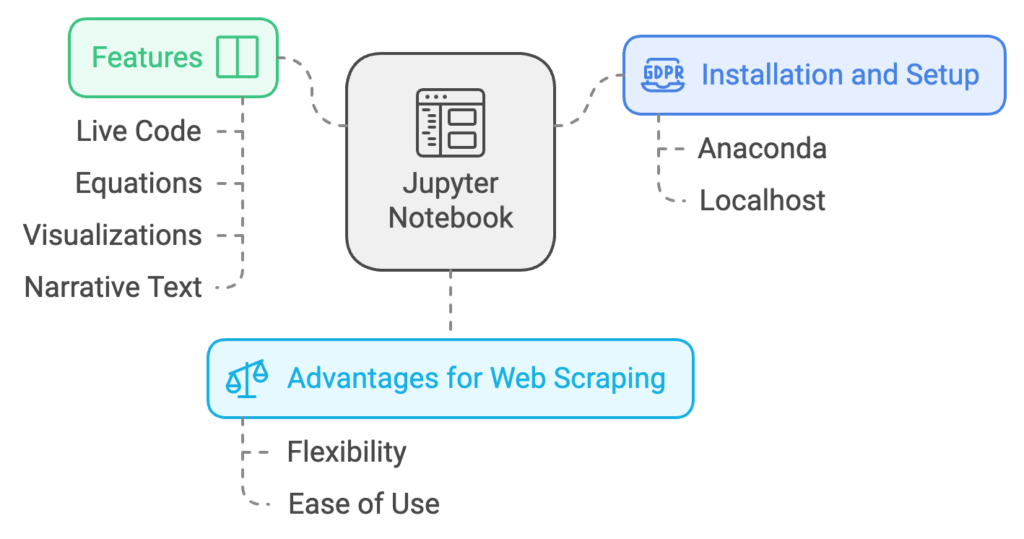

Jupyter Notebook is a popular open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and narrative text. It’s particularly well-suited for web scraping tasks due to its flexibility and ease of use.

Installation and Setup

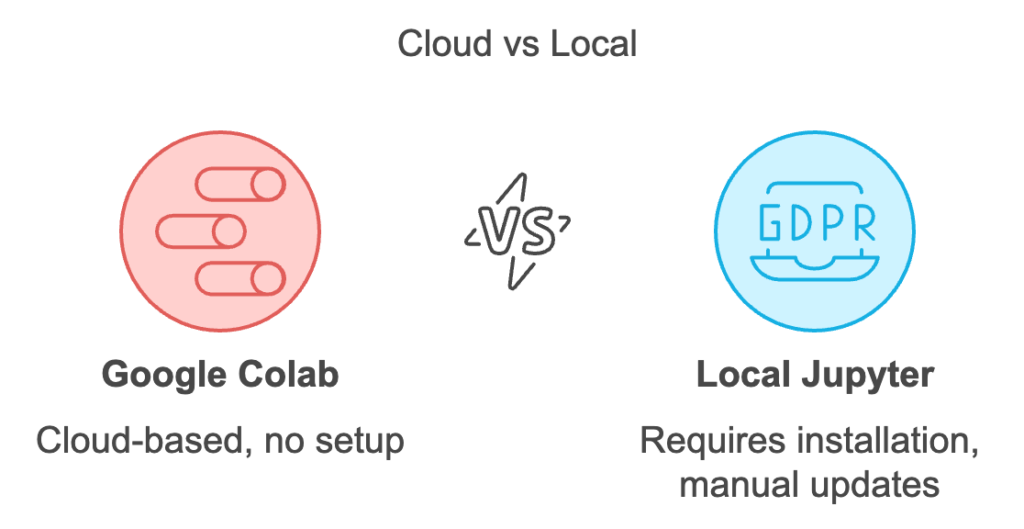

You can install Jupyter Notebook via Anaconda, a widely-used package manager and environment manager for Python. By running Jupyter on localhost, you gain more control over file operations and system resources compared to cloud-based solutions.

Advantages

- Complete Control: Running Jupyter Notebook on localhost provides you with full control over your environment, allowing for more granular management of files and directories.

- Customization: You can install and configure additional libraries and tools as needed, tailoring your setup to specific project requirements.

- Offline Access: Unlike cloud-based platforms, localhost environments can be accessed and used without an internet connection.

File Management Tip

For seamless file management across multiple devices, consider moving your Jupyter Notebook’s IPython folder into a cloud storage service like Dropbox or pCloud. This approach offers a cloud-based user experience, enabling you to access and edit your notebooks from anywhere.

Google Colab: Cloud-Based Convenience

Google Colab is a free, cloud-based Jupyter Notebook environment that requires no setup and runs entirely in the cloud. It’s an excellent option for those who prefer not to manage local installations and updates.

Advantages

- Ease of Use: With no installation required, Google Colab is accessible from any device with a web browser.

- Collaboration: Colab notebooks can be easily shared with team members, facilitating collaborative projects and real-time editing.

- GPU Support: Colab provides free access to GPU resources, which can significantly speed up data processing and machine learning tasks.

Limitations

While Google Colab is convenient, it does have some limitations compared to localhost environments. File system access is more restricted, and long-running tasks may be interrupted due to resource constraints.

Python: The Web Scraping Powerhouse

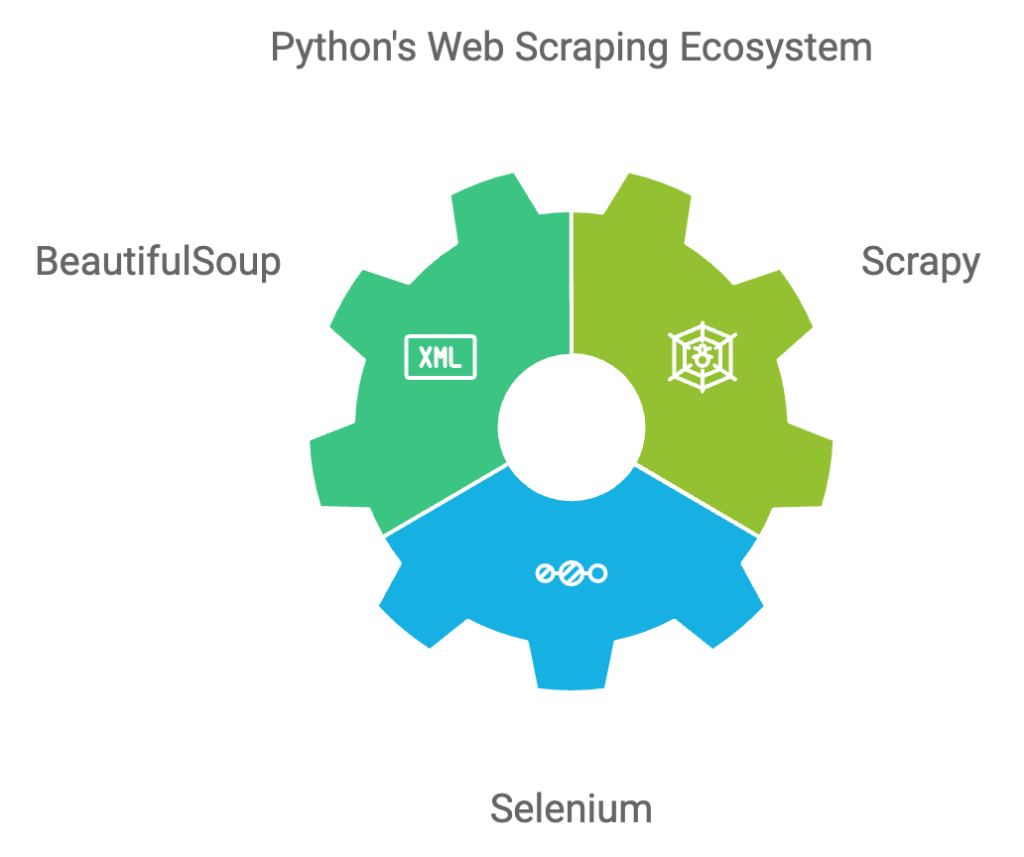

Python has emerged as a leading language for web scraping, thanks to its simplicity and powerful libraries such as BeautifulSoup, Scrapy, and Selenium. Its growing prominence in the AI and data science fields further cements its position as the go-to language for web scraping tasks.

Recommended Libraries

- BeautifulSoup: Ideal for parsing HTML and XML documents, making it easy to extract data from web pages.

- Scrapy: A fast, high-level web crawling and web scraping framework for Python, perfect for large-scale scraping projects.

- Selenium: Useful for scraping dynamic websites that require interaction with JavaScript elements.

A Detailed Explanation of How to Use Jupyter Notebook and Google Colab for Web Scraping

Using Jupyter Notebook for Web Scraping

- Installation:

- Step 1: Download and install Anaconda, which includes Jupyter Notebook, Python, and many essential libraries.

- Step 2: Open Anaconda Navigator and launch Jupyter Notebook from there. Alternatively, you can start it from the command line by typing jupyter notebook.

- Setting Up the Environment:

- Once Jupyter Notebook is running, create a new Python notebook by clicking “New” and selecting “Python 3”.

- Install necessary libraries such as BeautifulSoup, requests, and pandas by running the following commands in a cell:

!pip install beautifulsoup4 requests pandas

- Writing the Web Scraping Code:

- Import the required libraries:

import requests

from bs4 import BeautifulSoup

import pandas as pd- Fetch the webpage content using the requests library:

url = 'https://example.com'

response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')

- Extract data from the webpage:

# Example: Extracting all headers from the page

headers = soup.find_all('h2') # Adjust tag based on the website structure

header_texts = [header.get_text() for header in headers]

print(header_texts)

- Save the data into a CSV file:

df = pd.DataFrame(header_texts, columns=['Headers'])

df.to_csv('scraped_data.csv', index=False)

- File Management:

- To manage your notebooks across multiple devices, move your Jupyter Notebook’s IPython folder to a cloud storage service like Dropbox or pCloud. This allows you to access your notebooks from anywhere, providing a cloud-based user experience.

Using Google Colab for Web Scraping

- Accessing Google Colab:

- Open Google Colab in your web browser. You will need a Google account to use this service.

- Setting Up the Environment:

- Create a new Python notebook by clicking “New Notebook”.

- Install necessary libraries by running the following commands in a cell:

!pip install beautifulsoup4 requests pandas

- Writing the Web Scraping Code:

- Import the required libraries:

import requests

from bs4 import BeautifulSoup

import pandas as pd

- Fetch the webpage content using the requests library:

url = 'https://example.com' # Replace with the actual URL you want to scrape

response = requests.get(url)

if response.status_code == 200:

print("Webpage fetched successfully!")

else:

print(f"Failed to fetch webpage. Status code: {response.status_code}")

exit()

soup = BeautifulSoup(response.content, 'html.parser')

title = soup.title.string if soup.title else "No title found"

print(f"Page Title: {title}")

paragraphs = soup.find_all('p')

for paragraph in paragraphs:

print(paragraph.get_text())

data = {

'Paragraphs': [paragraph.get_text() for paragraph in paragraphs]

}

df = pd.DataFrame(data)

print(df)

df.to_csv('scraped_data.csv', index=False)

print("Data saved to scraped_data.csv")Promotion: Web Scraping Service

If you’re looking to harness the power of web scraping but lack the time or expertise, our web scraping service is here to help. We specialize in extracting and delivering high-quality data tailored to your specific needs. Whether you’re conducting market research, monitoring competitor websites, or gathering data for machine learning projects, our team of experts will ensure you get the insights you need to stay ahead of the competition.

Related Articles: